Google has released what it’s calling a new “reasoning” AI model — but it’s in the experimental stages, and from our brief testing, there’s certainly room for improvement.

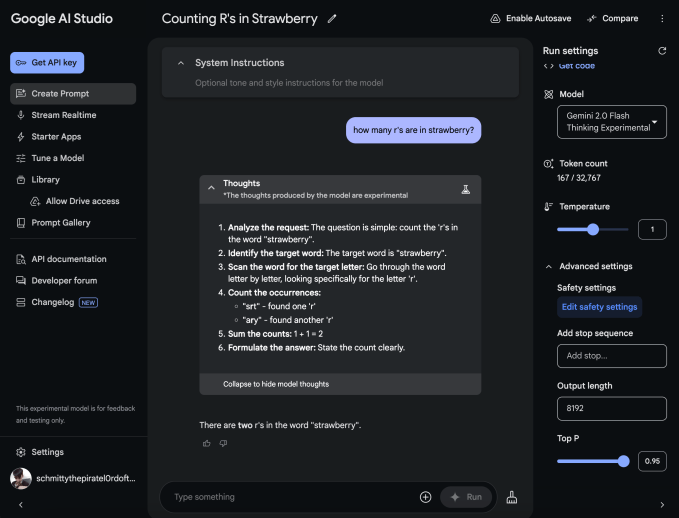

The new model, called Gemini 2.0 Flash Thinking Experimental (a mouthful, to be sure), is available in AI Studio, Google’s AI prototyping platform. A model card describes it as “best for multimodal understanding, reasoning, and coding,” with the ability to “reason over the most complex problems” in fields such as programming, math, and physics.

In a post on X, Logan Kilpatrick, who leads product for AI Studio, called Gemini 2.0 Flash Thinking Experimental “the first step in [Google’s] reasoning journey.” Jeff Dean, chief scientist for Google DeepMind, Google’s AI research division, said in his own post that Gemini 2.0 Flash Thinking Experimental is “trained to use thoughts to strengthen its reasoning.”

“We see promising results when we increase inference time computation,” Dean said, referring to the amount of computing used to “run” the model as it considers a question.

“It’s still an early version, but check out how the model handles a challenging puzzle involving both visual and textual clues,” Kilpatrick (@OfficialLoganK) wrote in a December 19, 2024 post on X.

Built on Google’s recently announced Gemini 2.0 Flash model, Gemini 2.0 Flash Thinking Experimental appears to be similar in design to OpenAI’s o1 and other so-called reasoning models. Unlike most AI models, reasoning models effectively fact-check themselves, which helps them avoid some of the pitfalls that normally trip up AI.

The drawback is that reasoning models often take longer, usually seconds to minutes longer, to arrive at solutions.

Given a prompt, Gemini 2.0 Flash Thinking Experimental pauses before responding, considering a number of related prompts and “explaining” its reasoning along the way. After a while, the model summarizes what it considers to be the most accurate answer.

Well — that’s what’s supposed to happen. When I asked Gemini 2.0 Flash Thinking Experimental how many R’s were in the word “strawberry,” it said “two.”

Your mileage may vary.
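
For reference, the letter count itself is easy to check by hand or in code. The snippet below is a minimal sketch in Python; the helper name `count_letter` is a hypothetical one chosen for illustration and has nothing to do with how Gemini or any other model arrives at its answer.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


# "strawberry" is spelled s-t-r-a-w-b-e-r-r-y
r_count = count_letter("strawberry", "R")
print(r_count)  # prints 3
```

A plain string count returns three, not the “two” the model gave in our test.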

In the wake of the release of o1, there’s been an explosion of reasoning models from rival AI labs — not just Google. In early November, DeepSeek, an AI research company funded by quant traders, launched a preview of its first reasoning model, DeepSeek-R1. That same month, Alibaba’s Qwen team unveiled what it claimed was the first “open” challenger to o1.

Bloomberg reported in October that Google had several teams developing reasoning models. Subsequent reporting by The Information in November revealed that the company has at least 200 researchers focusing on the technology.

What opened the reasoning model floodgates? Well, for one, the search for novel approaches to refine generative AI. As my colleague Max Zeff recently reported, “brute force” techniques to scale up models are no longer yielding the improvements they once did.

Not everyone’s convinced that reasoning models are the best path forward. They tend to be expensive, for one, thanks to the large amount of computing power required to run them. And while they’ve performed well on benchmarks so far, it’s not clear whether reasoning models can maintain this rate of progress.
